A personal reflection on how I moved from my Debian home to find two new homes with Trisquel and Guix for my own ethical computing, and while doing so settled my dilemma about further Debian contributions.

Debian‘s contributions to the free software community has been tremendous. Debian was one of the early distributions in the 1990’s that combined the GNU tools (compiler, linker, shell, editor, and a set of Unix tools) with the Linux kernel and published a free software operating system. Back then there were little guidance on how to publish free software binaries, let alone entire operating systems. There was a lack of established community processes and conflict resolution mechanisms, and lack of guiding principles to motivate the work. The community building efforts that came about in parallel with the technical work has resulted in a steady flow of releases over the years.

From the work of Richard Stallman and the Free Software Foundation (FSF) during the 1980’s and early 1990’s, there was at the time already an established definition of free software. Inspired by free software definition, and a belief that a social contract helps to build a community and resolve conflicts, Debian’s social contract (DSC) with the free software community was published in 1997. The DSC included the Debian Free Software Guidelines (DFSG), which directly led to the Open Source Definition.

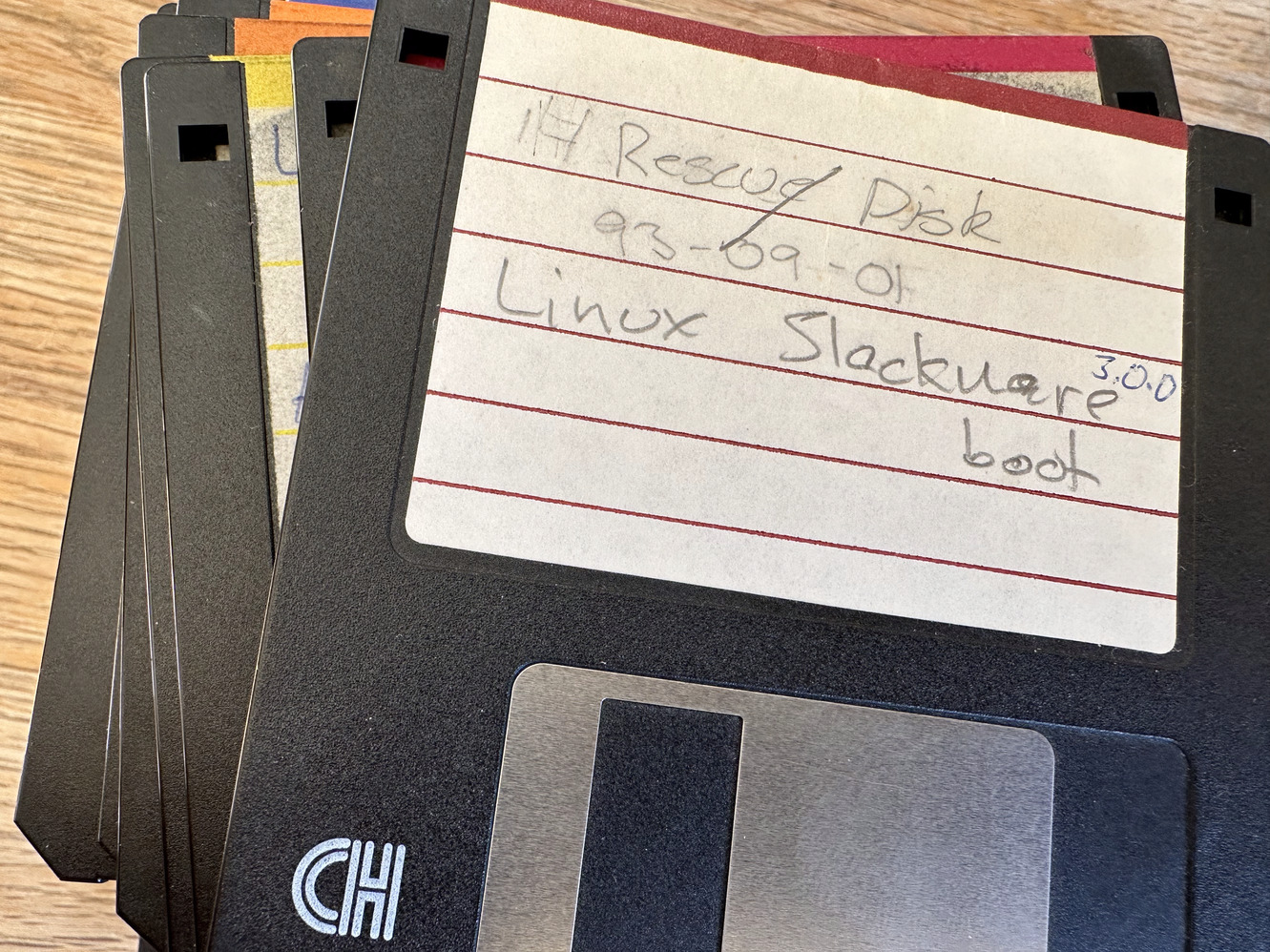

I was introduced to GNU/Linux through Slackware in the early 1990’s (oh boy those nights calculating XFree86 modeline’s and debugging sendmail.cf) and primarily used RedHat Linux during ca 1995-2003. I switched to Debian during the Woody release cycles, when the original RedHat Linux was abandoned and Fedora launched. It was Debian’s explicit community processes and infrastructure that attracted me. The slow nature of community processes also kept me using RedHat for so long: centralized and dogmatic decision processes often produce quick and effective outcomes, and in my opinion RedHat Linux was technically better than Debian ca 1995-2003. However the RedHat model was not sustainable, and resulted in the RedHat vs Fedora split. Debian catched up, and reached technical stability once its community processes had been grounded. I started participating in the Debian community around late 2006.

My interpretation of Debian’s social contract is that Debian should be a distribution of works licensed 100% under a free license. The Debian community has always been inclusive towards non-free software, creating the contrib/non-free section and permitting use of the bug tracker to help resolve issues with non-free works. This is all explained in the social contract. There has always been a clear boundary between free and non-free work, and there has been a commitment that the Debian system itself would be 100% free.

The concern that RedHat Linux was not 100% free software was not critical to me at the time: I primarily (and happily) ran GNU tools on Solaris, IRIX, AIX, OS/2, Windows etc. Running GNU tools on RedHat Linux was an improvement, and I hadn’t realized it was possible to get rid of all non-free software on my own primary machine. Debian realized that goal for me. I’ve been a believer in that model ever since. I can use Solaris, macOS, Android etc knowing that I have the option of using a 100% free Debian.

While the inclusive approach towards non-free software invite and deserve criticism (some argue that being inclusive to non-inclusive behavior is a bad idea), I believe that Debian’s approach was a successful survival technique: by being inclusive to – and a compromise between – free and non-free communities, Debian has been able to stay relevant and contribute to both environments. If Debian had not served and contributed to the free community, I believe free software people would have stopped contributing. If Debian had rejected non-free works completely, I don’t think the successful Ubuntu distribution would have been based on Debian.

I wrote the majority of the text above back in September 2022, intending to post it as a way to argue for my proposal to maintain the status quo within Debian. I didn’t post it because I felt I was saying the obvious, and that the obvious do not need to be repeated, and the rest of the post was just me going down memory lane.

The Debian project has been a sustainable producer of a 100% free OS up until Debian 11 bullseye. In the resolution on non-free firmware the community decided to leave the model that had resulted in a 100% free Debian for so long. The goal of Debian is no longer to publish a 100% free operating system, instead this was added: “The Debian official media may include firmware”. Indeed the Debian 12 bookworm release has confirmed that this would not only be an optional possibility. The Debian community could have published a 100% free Debian, in parallel with the non-free Debian, and still be consistent with their newly adopted policy, but chose not to. The result is that Debian’s policies are not consistent with their actions. It doesn’t make sense to claim that Debian is 100% free when the Debian installer contains non-free software. Actions speaks louder than words, so I’m left reading the policies as well-intended prose that is no longer used for guidance, but for the peace of mind for people living in ivory towers. And to attract funding, I suppose.

So how to deal with this, on a personal level? I did not have an answer to that back in October 2022 after the vote. It wasn’t clear to me that I would ever want to contribute to Debian under the new social contract that promoted non-free software. I went on vacation from any Debian work. Meanwhile Debian 12 bookworm was released, confirming my fears. I kept coming back to this text, and my only take-away was that it would be unethical for me to use Debian on my machines. Letting actions speak for themselves, I switched to PureOS on my main laptop during October, barely noticing any difference since it is based on Debian 11 bullseye. Back in December, I bought a new laptop and tried Trisquel and Guix on it, as they promise a migration path towards ppc64el that PureOS do not.

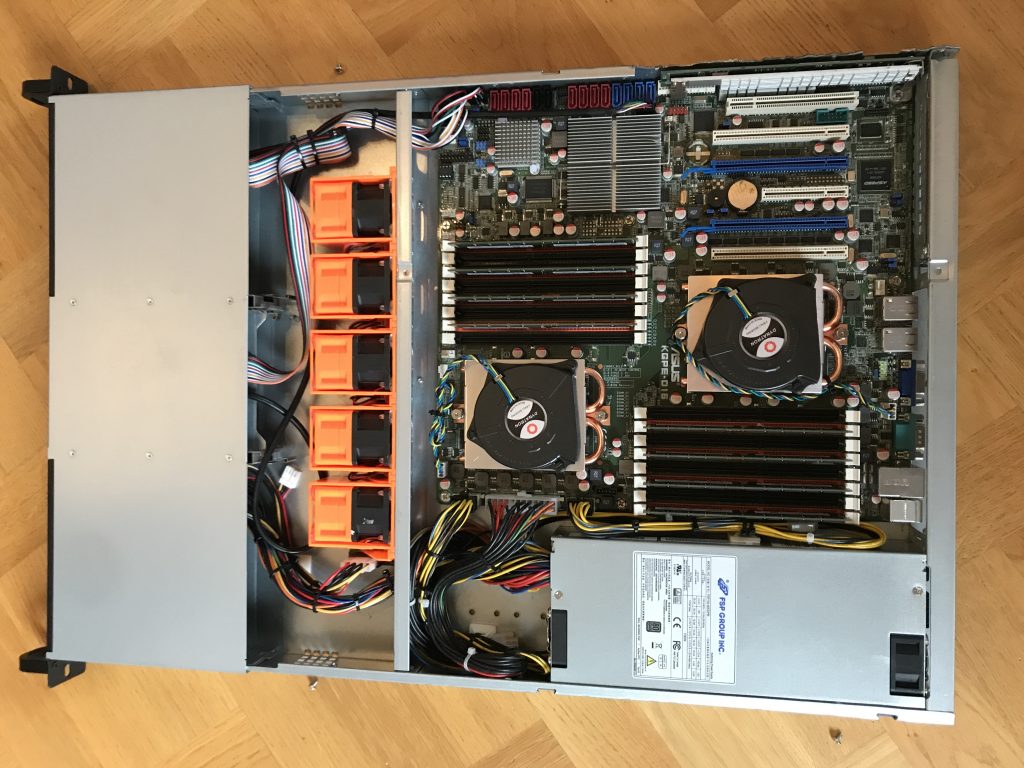

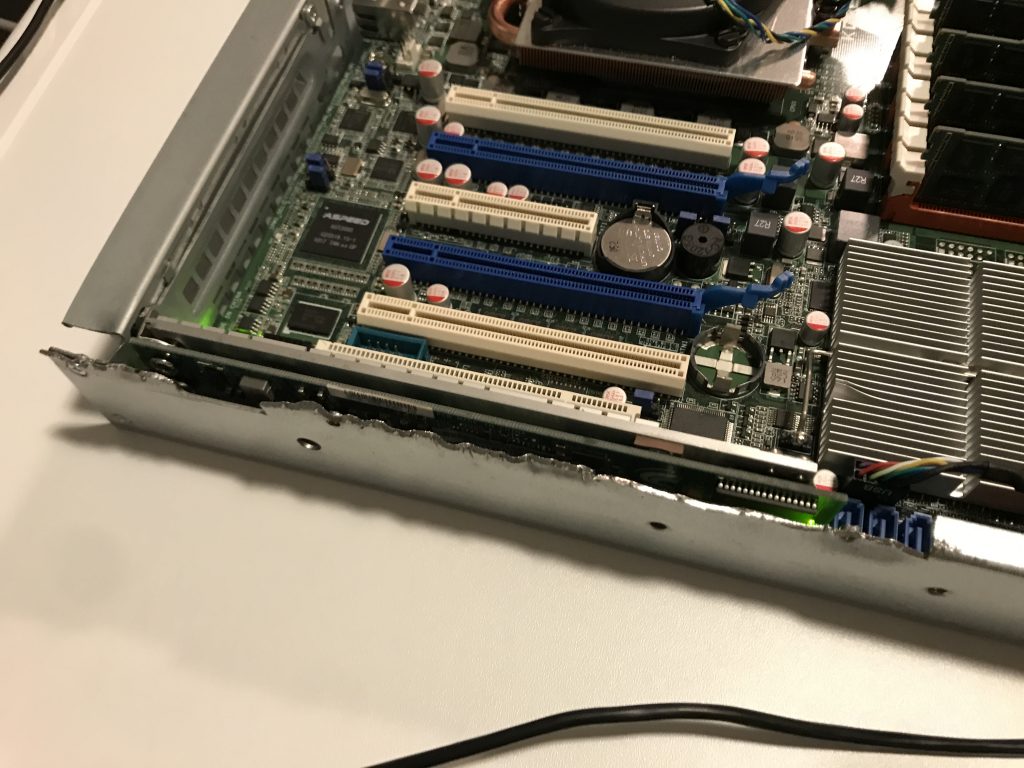

While I pondered how to approach my modest Debian contributions, I set out to learn Trisquel and gained trust in it. I migrated one Debian machine after another to Trisquel, and started to use Guix on others. Migration was easy because Trisquel is based on Ubuntu which is based on Debian. Using Guix has its challenges, but I enjoy its coherant documented environment. All of my essential self-hosted servers (VM hosts, DNS, e-mail, WWW, Nextcloud, CI/CD builders, backup etc) uses Trisquel or Guix now. I’ve migrated many GitLab CI/CD rules to use Trisquel instead of Debian, to have a more ethical computing base for software development and deployment. I wish there were official Guix docker images around.

Time has passed, and when I now think about any Debian contributions, I’m a little less muddled by my disappointment of the exclusion of a 100% free Debian. I realize that today I can use Debian in the same way that I use macOS, Android, RHEL or Ubuntu. And what prevents me from contributing to free software on those platforms? So I will make the occasional Debian contribution again, knowing that it will also indirectly improve Trisquel. To avoid having to install Debian, I need a development environment in Trisquel that allows me to build Debian packages. I have found a recipe for doing this:

# System commands:

sudo apt-get install debhelper git-buildpackage debian-archive-keyring

sudo wget -O /usr/share/debootstrap/scripts/debian-common https://sources.debian.org/data/main/d/debootstrap/1.0.128%2Bnmu2/scripts/debian-common

sudo wget -O /usr/share/debootstrap/scripts/sid https://sources.debian.org/data/main/d/debootstrap/1.0.128%2Bnmu2/scripts/sid

# Run once to create build image:

DIST=sid git-pbuilder create --mirror http://deb.debian.org/debian/ --debootstrapopts "--exclude=usr-is-merged" --basepath /var/cache/pbuilder/base-sid.cow

# Run in a directory with debian/ to build a package:

gbp buildpackage --git-pbuilder --git-dist=sid

How to sustainably deliver a 100% free software binary distributions seems like an open question, and the challenges are not all that different compared to the 1990’s or early 2000’s. I’m hoping Debian will come back to provide a 100% free platform, but my fear is that Debian will compromise even further on the free software ideals rather than the opposite. With similar arguments that were used to add the non-free firmware, Debian could compromise the free software spirit of the Linux boot process (e.g., non-free boot images signed by Debian) and media handling (e.g., web browsers and DRM), as Debian have already done with appstore-like functionality for non-free software (Python pip). To learn about other freedom issues in Debian packaging, browsing Trisquel’s helper scripts may enlight you.

Debian’s setback and the recent setback for RHEL-derived distributions are sad, and it will be a challenge for these communities to find internally consistent coherency going forward. I wish them the best of luck, as Debian and RHEL are important for the wider free software eco-system. Let’s see how the community around Trisquel, Guix and the other FSDG-distributions evolve in the future.

The situation for free software today appears better than it was years ago regardless of Debian and RHEL’s setbacks though, which is important to remember! I don’t recall being able install a 100% free OS on a modern laptop and modern server as easily as I am able to do today.

Happy Hacking!

Addendum 22 July 2023: The original title of this post was Coping with non-free Debian, and there was a thread about it that included feedback on the title. I do agree that my initial title was confrontational, and I’ve changed it to the more specific Coping with non-free software in Debian. I do appreciate all the fine free software that goes into Debian, and hope that this will continue and improve, although I have doubts given the opinions expressed by the majority of developers. For the philosophically inclined, it is interesting to think about what it means to say that a compilation of software is freely licensed. At what point does a compilation of software deserve the labels free vs non-free? Windows probably contains some software that is published as free software, let’s say Windows is 1% free. Apple authors a lot of free software (as a tangent, Apple probably produce more free software than what Debian as an organization produces), and let’s say macOS contains 20% free software. Solaris (or some still maintained derivative like OpenIndiana) is mostly freely licensed these days, isn’t it? Let’s say it is 80% free. Ubuntu and RHEL pushes that closer to let’s say 95% free software. Debian used to be 100% but is now slightly less at maybe 99%. Trisquel and Guix are at 100%. At what point is it reasonable to call a compilation free? Does Debian deserve to be called freely licensed? Does macOS? Is it even possible to use these labels for compilations in any meaningful way? All numbers just taken from thin air. It isn’t even clear how this can be measured (binary bytes? lines of code? CPU cycles? etc). The caveat about license review mistakes applies. I ignore Debian’s own claims that Debian is 100% free software, which I believe is inconsistent and no longer true under any reasonable objective analysis. It was not true before the firmware vote since Debian ships with non-free blobs in the Linux kernel for example.